Missing Data in Excel? 5 Ways to Fix It (Without Losing Accuracy)

Before you touch a single empty cell, you have to put on your detective hat. The first, and most important, step in dealing with missing data isn't figuring out how to fix it, but why it's missing in the first place.

This initial diagnostic work is everything. It guides every decision you make next and separates a trustworthy analysis from one that's built on a shaky foundation. Skipping this step is a huge gamble, and it almost never pays off.

Every blank cell in your spreadsheet tells a story. Generally, these stories fall into one of three categories, and each one has very different implications for your work.

Spending too much time on Excel?

Elyx AI generates your formulas and automates your tasks in seconds.

Try for free →The Three Patterns of Missing Data

It's tempting to think a blank cell is just a blank cell, but from a statistical perspective, that's not true. We need to figure out if there's a pattern to the madness.

-

Missing Completely at Random (MCAR): This is the best-case scenario, though it's pretty rare in the real world. Here, the fact that a value is missing has nothing to do with any other data, seen or unseen. Think of a survey respondent accidentally skipping a question. It's just a random slip-up, not part of a larger pattern.

-

Missing at Random (MAR): This one is more common and a bit more complex. The missingness can be explained by other information you do have in your dataset. For instance, in a patient survey, maybe men are less likely to answer questions about their emotional well-being. The missing data in the "emotional well-being" column is related to the "gender" column, but not to the well-being score itself.

-

Missing Not at Random (MNAR): This is the real troublemaker. The reason a value is missing is directly related to the value you can't see. The classic example is an income survey where people with very high salaries are the most likely to leave the "income" field blank. The absence of the data is tied directly to what that data would have been.

Getting these distinctions right is fundamental. A common approach is to just delete any row with a missing value—a method called complete case analysis (CCA). This only works without introducing bias if your data is genuinely MCAR or if the missingness has zero connection to the outcome you're studying.

If you don't understand the context, you're flying blind. Imagine your data is MNAR (like the income example) and you just delete all the rows with missing income. You've just systematically removed all the high-earners from your dataset. Any analysis you run on income will now be completely skewed.

To help you get a quick handle on these concepts, here’s a simple breakdown.

Understanding the Three Types of Missing Data

| Type of Missingness | Description | Example Scenario | Primary Risk if Handled Incorrectly |

|---|---|---|---|

| MCAR | The missingness is a purely random event and has no relationship with any other variable. | A data entry clerk randomly skips a few cells when transcribing paper records. | Loss of statistical power due to a smaller sample size, but results remain unbiased. |

| MAR | The probability of a value being missing depends on other observed variables in the dataset. | In a fitness app, users over 50 are less likely to log their high-intensity workouts. | Simple deletion (CCA) will introduce bias. For example, your analysis would underestimate workout intensity for older users. |

| MNAR | The probability of a value being missing is related to the unobserved value itself. | Patients with severe symptoms are less likely to fill out a "daily pain level" survey. | The most severe type of bias. Your analysis will systematically underrepresent the very group you're interested in (e.g., those in severe pain). |

Recognizing which pattern you're dealing with is the critical first step. It helps you choose a strategy that actually preserves the integrity of your data.

Over the last 20 years, much more sophisticated methods like multiple imputation (MI) have become the gold standard for handling MAR data far more effectively than just deleting rows. In fact, research shows that analysts frequently misunderstand when it's safe to use simple deletion. You can dig into a detailed comparison of these statistical methods to see just how much of a difference the right technique can make.

How to Find and Visualize Missing Data in Excel

You can't fix a problem you can't see. Before you even think about which imputation method to use, your first job is to hunt down every single blank cell and get a real sense of how big the issue is. Excel actually has some great, and often forgotten, tools that make this whole diagnostic process surprisingly visual and straightforward.

A fantastic place to start is with Excel's Go To Special feature. I use this all the time for a quick-and-dirty way to highlight every empty cell in one shot, giving me an instant map of all the gaps.

It's simple to do:

- Select the entire data range you need to check.

- Hit F5 (or Ctrl+G) to bring up the "Go To" box.

- Click the "Special…" button in the corner.

- From the new menu, just choose the Blanks option and hit OK.

Just like that, Excel selects every empty cell in your range. I usually fill them with a bright color right away. This one small step turns an invisible issue into a clear, tangible target you can focus on while you clean up the data.

Make Missing Values Impossible to Ignore

Highlighting cells is a good first pass, but when you're staring down a massive dataset, you need something more dynamic. This is where Conditional Formatting becomes your best friend. You can set up a simple rule that automatically paints any blank cell a certain color, making missing data jump off the screen as you scroll.

Let's say you're looking at a customer feedback table, and the "Satisfaction Score" column is full of holes. By setting a rule to fill blank cells in that column with a bright red, you immediately see every incomplete entry. This can help you spot patterns you would have completely missed otherwise.

Visualizing missing data isn’t just about making your sheet look colorful; it's about making the problem undeniable. A color-coded heatmap of your data can instantly show you if missing values are clustered in specific rows or columns, which often gives you clues about why the data is missing in the first place.

To make sure your visuals are actually helping and not just adding noise, it's worth brushing up on these 8 Essential Data Visualization Best Practices.

Quantify the Problem with a PivotTable

Okay, so highlighting shows you where the gaps are. But a quick PivotTable will tell you how bad the problem is. Getting a high-level summary is essential for figuring out where to focus your cleaning efforts.

Here’s a quick way to throw one together to assess the damage:

- Create a new PivotTable from your source data.

- Drag the field you're curious about (like 'Customer Age') into the Rows area.

- Then, drag that same exact field into the Values area—do this twice.

- Configure the first value field to Count and the second one to show % of Grand Total.

The resulting PivotTable will have a row conveniently labeled "(blank)" along with its count and percentage. Now you know exactly what portion of that column is empty. If that table tells you 35% of your 'Customer Age' data is missing, you know you've got a serious issue on your hands that probably can't be fixed by just deleting rows.

Choosing Your Strategy: Deletion vs. Imputation

Once you’ve hunted down all the blank cells in your dataset, you're at a fork in the road. Do you take the quick and easy path of deleting the incomplete data, or do you take the more thoughtful route of imputation? This isn't a small decision—it fundamentally shapes the reliability of your final analysis. Your best move depends on how much data is missing, why it’s missing, and what you’re trying to achieve.

Deletion is the most straightforward approach. You're essentially just removing data points to get a "clean" dataset to work with. It's fast and simple, but if you're not careful, it can do more harm than good.

When Deletion Makes Sense

You can go about deleting data in a couple of ways:

- Listwise Deletion: This is the most common (and aggressive) method. If a row has even a single missing value, the entire row gets the boot.

- Pairwise Deletion: This is a bit more nuanced. Instead of tossing the whole row, you only exclude it from calculations where the specific missing value is needed. The rest of the data in that row can still be used for other parts of your analysis.

So, when is it actually safe to just delete rows? I only recommend it when two specific conditions are met. First, the amount of missing data has to be tiny—most pros I know stick to a rule of less than 5% of the total dataset. Second, the data needs to be Missing Completely at Random (MCAR), which means there's no rhyme or reason to why the data is missing.

Think about it this way: if you have a massive customer database with millions of records, deleting a few hundred incomplete entries that are scattered randomly is probably fine. It’s unlikely to skew your results.

But here’s the problem: too many people default to deletion without thinking it through. It’s a bad habit. A 2022 study on how researchers handle this exact problem was pretty eye-opening. It found that a staggering 72.6% of papers just used listwise deletion, a technique that's really only valid under that strict MCAR assumption. You can dig into the full research about these data handling choices to see just how common this issue is.

A Word of Caution: Think of deleting data like performing surgery. Sometimes it's a necessary fix, but if you cut too much, you can seriously damage the patient. Deleting more than a small fraction of your data means you're throwing away valuable information and creating a sample that no longer represents the real world.

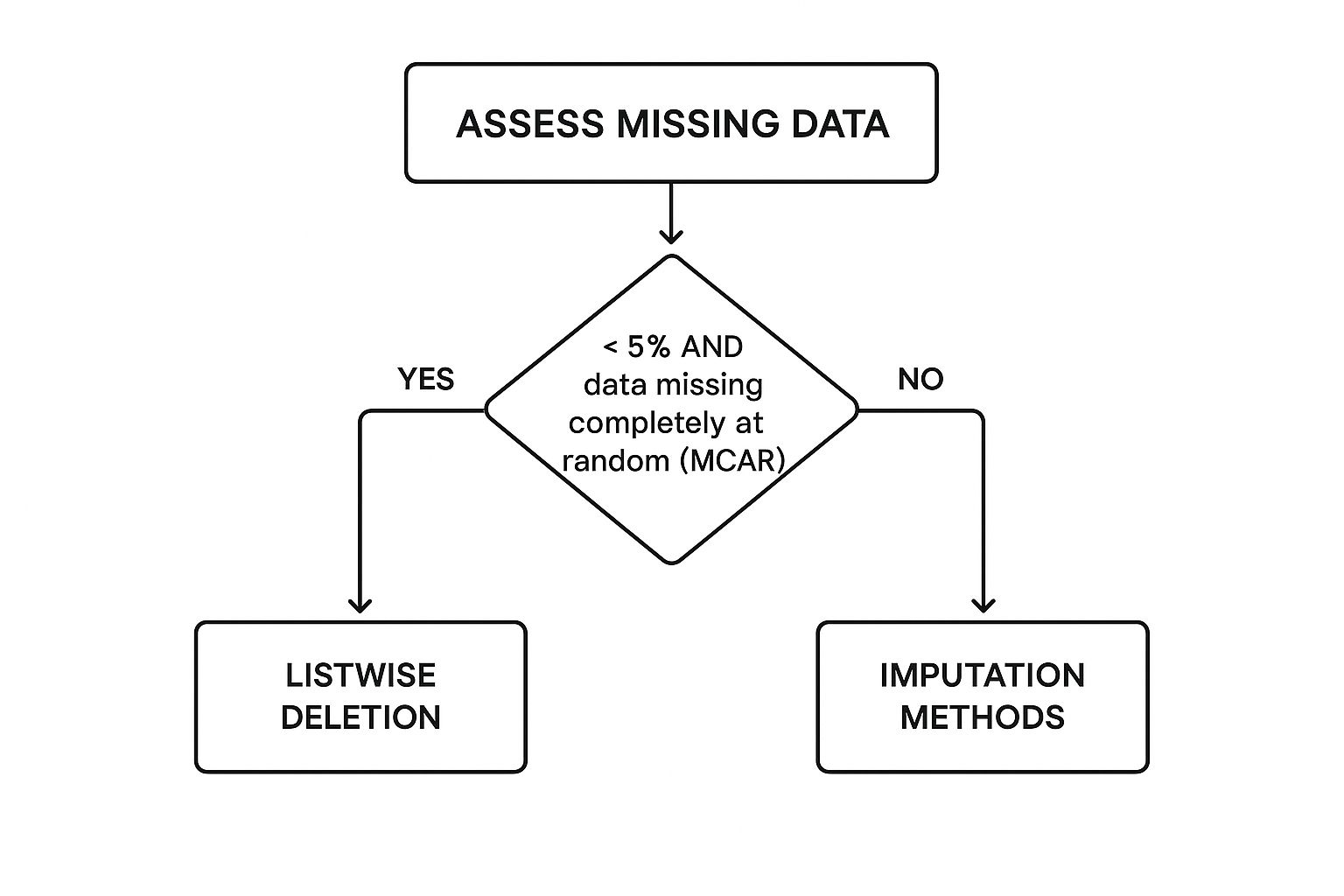

Deciding Between Deletion and Imputation

When deleting rows feels too risky, imputation is your next best friend. Imputation is just a fancy word for filling in the blanks with estimated or calculated values. It’s more work, sure, but it preserves your sample size and helps you avoid the bias that deletion can introduce, especially when your data is Missing at Random (MAR) instead of completely at random.

This decision tree gives you a straightforward way to think through the problem when you first find those gaps in your data.

As you can see, imputation isn't just a backup plan. It should be your go-to strategy unless you meet those very specific criteria for safe deletion. The chart is designed to steer you away from the knee-jerk reaction to just delete everything and push you toward methods that actually protect the integrity of your data.

If your situation doesn't fit into that narrow little box for deletion, it's time to roll up your sleeves and start exploring imputation techniques.

Practical Ways to Fill in Missing Data in Excel

So, you've found the gaps in your data, but deleting those rows would mean losing too much valuable information. What's the next move? It's time to talk about imputation, which is just a technical term for filling in those blanks with educated guesses. This keeps your dataset whole and lets you move forward with your analysis.

It might sound intimidating, but you can pull off some surprisingly effective imputation methods right inside Excel with formulas you already know.

The simplest approach is to replace the empty cells with a single, calculated value. These are quick, easy fixes that are perfect for straightforward numerical or categorical data.

Simple Statistical Fixes

These are the go-to methods for a reason—they're quick, easy, and often good enough, especially when you're just starting out.

- Mean Imputation: This is your best bet for numerical data that doesn't have wild outliers. You just calculate the average of the entire column and use that number to fill in any blanks.

- Excel Formula:

=IF(ISBLANK(A2), AVERAGE(A:A), A2)

- Excel Formula:

- Median Imputation: Got outliers? Use the median. The median is the middle value in a dataset, so it isn't skewed by a few unusually high or low numbers, making it a much safer choice in those situations.

- Excel Formula:

=IF(ISBLANK(A2), MEDIAN(A:A), A2)

- Excel Formula:

- Mode Imputation: When you're dealing with non-numeric, categorical data (like "Department" or "Product Category"), the mode is the answer. It finds the most frequently occurring value in the column and plugs that into the gaps.

- Excel Formula:

=IF(ISBLANK(A2), MODE.SNGL(A:A), A2)

- Excel Formula:

These simple methods are incredibly common, especially when a dataset has less than 10% of its information missing. The major catch, however, is that they can artificially shrink the natural variation in your data, which might hide or distort the real relationships between your variables.

My Two Cents: I never, ever apply an imputation formula directly over my original data. Always, always create a new column for the imputed values. This way, you have a clean "before and after" to compare, and you can easily ditch the changes if they don't look right.

A Practical Comparison of Imputation Methods

Choosing the right imputation method can feel a bit like a guessing game. To make it easier, I've put together this table that breaks down the common techniques I use, explaining what they do, when to use them, and what to watch out for.

| Imputation Method | How It Works | Best For | Potential Drawback |

|---|---|---|---|

| Mean Imputation | Fills blanks with the column's average. | Normally distributed numerical data without outliers. | Reduces data variance and can be skewed by extremes. |

| Median Imputation | Fills blanks with the column's middle value. | Numerical data with outliers or a skewed distribution. | Also reduces variance, but is more robust to outliers. |

| Mode Imputation | Fills blanks with the most frequent value. | Categorical (non-numeric) data. | Can create a misleadingly large group of a single category. |

| Regression | Predicts missing values based on another related column. | When a strong linear relationship exists between variables. | Assumes a linear relationship that may not exist. |

Ultimately, the best method depends entirely on the nature of your data and what you're trying to achieve with your analysis. Start simple, and only add complexity if you need to.

Getting Smarter with Regression Imputation

What if you have a hunch that two of your columns are related? This is where you can get a little more sophisticated with linear regression. This technique predicts a missing value based on its relationship with another, complete column.

Let's say you're missing entries in a "Years of Experience" column, but your "Age" column is complete. It's a safe bet these two are connected. You can use Excel’s FORECAST.LINEAR function to predict the missing experience based on the corresponding age.

To make this work, you'll need to point the formula to your known x values (Age) and your known y values (Experience). Excel builds a small predictive model on the fly and uses it to estimate the missing y value for any given x.

- Excel Formula:

=FORECAST.LINEAR(B2, $C$2:$C$100, $B$2:$B$100)

(Here, the B column is Age, and the C column is the one with missing Experience values.)

This is a much more statistically sound approach than just plugging in the mean because it helps preserve the relationships within your data. The big "if," of course, is that it assumes the relationship is linear, which isn't always true. For more on getting your data ready for this kind of work, you can explore our in-depth guide on how to clean data in Excel.

Automating Data Cleaning to Save Time and Effort

Let's be honest: manually hunting down and fixing missing data in Excel is a grind. It works, sure, but it's a slow, mind-numbing process that steals time away from the real work. Every minute you spend wrestling with IF(ISBLANK()) formulas or calculating medians is a minute you're not spending on analysis or uncovering insights.

This old-school approach is also a minefield for human error, especially when your datasets get bigger. I've seen it happen countless times—one tiny mistake in a formula can quietly skew an entire analysis, and you might not catch it until it's too late.

Thankfully, there's a much better way to work. Instead of treating data cleaning as a manual chore, you can automate huge chunks of the process. What once took hours can now be done in just a few clicks. This isn't just about saving time; it's about making your data prep far more accurate and consistent.

The Power of Intelligent Cleaning Tools

Modern platforms are built to handle the entire data cleaning workflow. They can automatically scan your data, pinpoint different types of missing values, and suggest the right way to fill them based on the data's type and distribution. This is a massive leap forward from just guessing whether to plug in the mean or median.

The real game-changer with automation isn't just the speed. It’s about bringing sophisticated statistical methods to your fingertips. You can run complex strategies, like regression-based imputation, without writing a single line of code, which dramatically cuts down on manual mistakes and leads to more trustworthy results.

For instance, a smart tool might notice that your "Sales Volume" column has gaps but is closely related to a complete "Ad Spend" column. It could then recommend and perform a regression imputation—something that would be a real headache to set up from scratch in Excel. By automating these tasks, you also get consistency across all your reports. You can dive deeper into this in our guide to Excel report automation.

Here’s a glimpse of how a tool like Elyx.AI makes these automated cleaning choices clear and simple.

This kind of interface takes the guesswork out of the equation, letting you apply the best cleaning action for each column with total confidence.

A Smarter Way to Handle Missing Data

Instead of that tedious, multi-step manual process, your workflow becomes incredibly efficient.

- First, you just upload or connect your data.

- Then, you kick off an automated cleaning scan. The tool flags everything from missing values to typos and weird formatting.

- Next, you review the suggestions. The platform will recommend a fix for each column with missing data and explain why it’s a good fit.

- Finally, you apply the fixes with one click. You give the green light, and the tool cleans the entire dataset instantly.

This automated approach completely changes how to handle missing data. It turns a painful, error-prone chore into a fast, reliable step in your analysis. It frees you up to focus on what actually matters: understanding your data and making smart decisions.

Have Questions About Missing Data? You're Not Alone.

Even with the best game plan, you're bound to run into some head-scratchers when cleaning data. It's just part of the process. Working through these tricky spots is how you get really good at handling missing information. Let's dig into some of the questions I hear most often.

What's the "Magic Number" for Too Much Missing Data?

Everyone wants a single number, but honestly, there isn't one. While a common rule of thumb suggests that anything under 5% missing data is probably fine, the context is what truly matters. A rigid percentage can be misleading.

I always tell people to think about two things instead:

- How critical is the variable? Missing 20% of a minor data point might be a non-issue. But if you're missing just 3% of your most important outcome variable, that could throw your entire analysis into question.

- Why is it missing? A tiny amount of data that's Missing Not at Random (MNAR) can inject way more bias than a much larger chunk of data that's Missing Completely at Random (MCAR). You have to play detective and figure out the why before you decide on a threshold.

Can I Just Leave the Blanks?

This is a tempting shortcut, but it's rarely a good idea. When you leave cells blank, you're basically handing the reins over to your software, and that can lead to some seriously unpredictable results.

Different programs and models handle blanks in their own unique ways:

- Many statistical packages will just drop any row with a blank from the analysis entirely. Poof, gone.

- Some Excel functions or scripts might treat a blank as a zero, which will completely wreck your calculations for things like averages.

- Worst of all, some processes might just throw an error and crash.

It's always better to make a conscious choice, whether that's deleting the data or filling it in. This proactive approach is a huge part of maintaining solid data integrity. For a deeper dive, check out our guide on data quality best practices.

By actively choosing how to handle missing values, you stay in control of your analysis. It forces you to document your assumptions and makes your work transparent and repeatable—something you just can't do if you ignore the problem.

Is It Ever Okay to Just Delete Rows with Missing Data?

Yes, but it's a move you should make with extreme caution. As we touched on earlier, deleting the entire row (listwise deletion) can be an acceptable path, but only if you're confident it won't skew your results.

I only consider this approach safe when two conditions are met:

- The amount of data you're getting rid of is tiny (again, think less than 5% of your total dataset).

- You have a very strong reason to believe the data is Missing Completely at Random (MCAR).

Making deletion your default strategy is a recipe for trouble. You shrink your sample size, which weakens the statistical power of your findings, and you risk creating a dataset that no longer reflects the real-world population you're trying to understand. Treat deletion as a last resort, not your go-to move.

Reading Excel tutorials to save time?

What if an AI did the work for you?

Describe what you need, Elyx executes it in Excel.

Try 7 days free