What Is Natural Language Processing? A Complete Guide

Natural Language Processing (NLP) is a fascinating field of Artificial Intelligence (AI) that’s all about teaching computers to understand, interpret, and generate human language. Think of it as the bridge that finally lets us talk to our devices as naturally as we talk to each other.

So, What Is Natural Language Processing, Really?

At its core, NLP is about making computers fluent in our world. Human language is messy. It’s packed with nuance, sarcasm, slang, and tricky grammar rules—all things that are second nature to us but incredibly difficult for a machine to grasp. NLP gives computers the framework to navigate this complexity.

Let's take a simple example. You ask your phone, "What's the weather like in Chicago tomorrow?" Your device isn't just mindlessly matching keywords. It's using NLP to figure out your intent (you want a forecast), pull out the key entities (Chicago, tomorrow), and then piece together a coherent, spoken answer. That entire back-and-forth is pure NLP at work.

The Two Sides of the NLP Coin

NLP’s magic really comes from two distinct but related capabilities: one side is focused on understanding language, and the other is all about creating it. You need both for the kind of smooth interactions we’ve come to expect from modern tech.

A practical example of both sides working together: NLP enables conversational interactions with databases, so end users can ask for data in natural language instead of writing complex SQL queries. This democratizes data access and removes the need for specialized expertise among general users.

This dual ability is what makes NLP so effective: it doesn’t just read, it comprehends and then responds. The table below gives a quick overview of the two components.

NLP at a Glance: Understanding vs Generating Language

| Component | Objective | Example Task |
|---|---|---|
| Natural Language Understanding (NLU) | To comprehend the meaning of human language. This is the "input" side. | Analyzing the sentiment of a customer review or identifying the key people and places in a news article. |
| Natural Language Generation (NLG) | To create human-like text or speech from data. This is the "output" side. | Summarizing a long report, generating a product description, or answering a question in a chatbot. |

So, NLU figures out what you mean, and NLG crafts the response. Together, they create a complete, conversational loop.

Why Does NLP Matter So Much Right Now?

The importance of NLP has exploded because we're drowning in unstructured data. Think about it: emails, social media feeds, customer reviews, support tickets—it's an endless stream of text. Without NLP, all that valuable information is just digital noise.

NLP gives businesses the ability to analyze this ocean of text at a scale that would be impossible for any human team. It's the technology that filters spam from your inbox, powers the chatbot that answers your questions at midnight, and even allows tools like Elyx.AI to understand plain-English commands right inside a spreadsheet.

Simply put, NLP is the key to unlocking the immense value hidden within our everyday language.

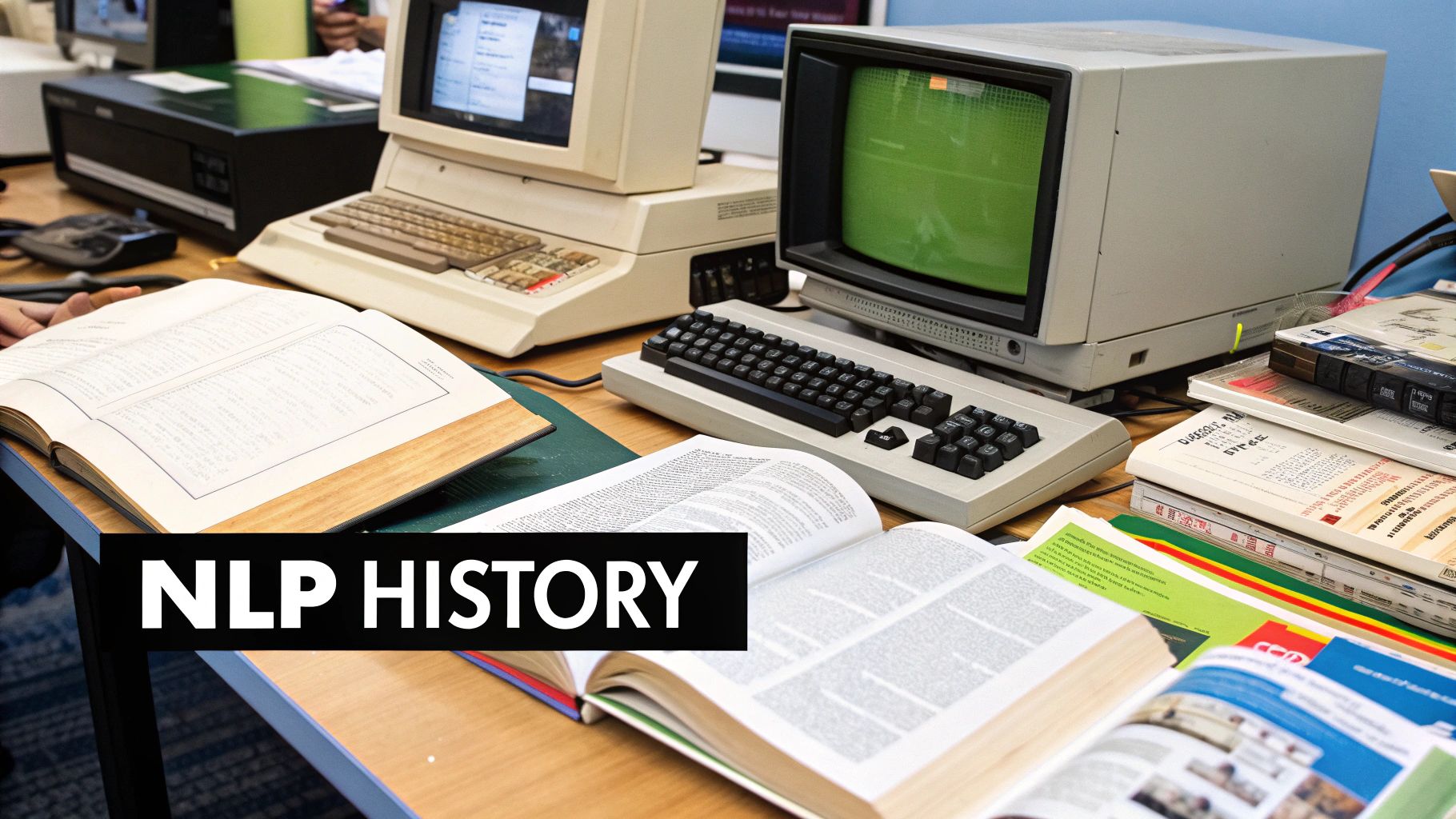

The Journey From Punch Cards To AI Conversations

The seamless conversations we have with AI today are a far cry from where Natural Language Processing started. Its roots stretch back to the 1950s, a time of colossal, room-sized computers that took their instructions from clunky punch cards. Back then, NLP wasn't a product; it was a bold scientific dream to see if a machine could ever truly get a handle on human language.

The ambition was huge. In 1950, Alan Turing set the stage with his famous Turing Test—a thought experiment to determine if a machine's conversation could be so natural it was indistinguishable from a human's. Just four years later, the Georgetown-IBM experiment made a concrete leap forward, successfully translating over 60 Russian sentences into English. This was a monumental first step. You can dig deeper into these early days and the history of natural language processing to see just how foundational they were.

This initial burst of optimism kicked off decades of research, pushing scientists to figure out how to program something as messy and unpredictable as language.

The Age Of Rules And Limitations

The first real attempt at NLP was what we now call a symbolic or rule-based approach. Throughout the 1960s, '70s, and '80s, computer scientists and linguists painstakingly built intricate sets of grammar rules and massive digital dictionaries. The thinking was simple: if you could give a computer a perfect instruction manual for a language, it ought to be able to understand it.

One of the most well-known examples from this era was ELIZA, a program from the mid-1960s that mimicked a conversation with a psychotherapist. ELIZA operated by spotting keywords in a user’s text and firing back a pre-programmed, scripted reply.
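To make that mechanism concrete, here is a minimal Python sketch of ELIZA-style keyword matching. The rules, replies, and the `respond` function are hypothetical illustrations, not the original ELIZA script (which also did things like swapping "my" for "your" in its echoes). Notice how a small rephrasing slips past the rule and falls through to a generic reply, exactly the kind of brittleness these systems suffered from.

```python
import re

# Hypothetical keyword rules in the spirit of ELIZA's scripts (not the original script).
# Each rule pairs a regex with a canned reply; rules are tried in order.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmother\b|\bfather\b", re.IGNORECASE), "Tell me more about your family."),
]
FALLBACK = "Please, go on."


def respond(user_text: str) -> str:
    """Return the scripted reply for the first rule whose pattern matches."""
    cleaned = user_text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Echo any captured words back into the canned template.
            return template.format(*match.groups())
    return FALLBACK


print(respond("I need a vacation."))     # Why do you need a vacation?
print(respond("I really need a break"))  # Please, go on.
```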

While fascinating for its time, this method had some serious flaws:

- It Was Brittle: Rule-based systems were completely thrown off by slang, typos, or grammatical mistakes—the very things that make human language, well, human.

- It Couldn't Scale: Imagine trying to write a rule for every single way a person could phrase a thought. It was a maintenance nightmare and practically impossible to make comprehensive.

- It Lacked True Understanding: These programs were just pattern-matchers. They couldn't grasp context, sarcasm, or the subtle meaning behind words.

These systems were like a tourist armed with only a phrasebook. They could handle simple, predictable exchanges but were utterly lost the second the conversation veered off-script. It was becoming clear that simply writing down rules wasn't going to cut it.

The core challenge shifted from teaching computers our rules to letting them learn our patterns. This transition from handcrafted logic to data-driven probability was the key that unlocked the next generation of NLP.

A New Chapter Driven By Data

The late 1980s and 1990s brought a seismic shift in thinking. Researchers started to ditch the rigid rules in favor of statistical models. Instead of programming a computer with the fact that "run" is a verb, they would feed it millions of sentences and let it figure out the probability that "run" acts as a verb in a specific context.

This statistical approach was a game-changer. By analyzing enormous collections of text, known as corpora, machines could start to learn language patterns, word relationships, and nuances all on their own. This data-driven method was far more flexible and resilient, capable of handling the beautiful mess of real-world language in a way the old rulebooks never could.
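A minimal sketch of that idea in Python, assuming a tiny hypothetical hand-tagged corpus: count how often "run" carries each tag, both overall and right after a particular kind of word, and turn the counts into probabilities. Real statistical systems did the same kind of counting, just over corpora with millions of words.

```python
from collections import Counter

# Tiny hypothetical hand-tagged corpus: (word, tag) pairs per sentence.
TAGGED = [
    [("i", "PRON"), ("run", "VERB"), ("daily", "ADV")],
    [("we", "PRON"), ("run", "VERB"), ("fast", "ADV")],
    [("they", "PRON"), ("run", "VERB"), ("home", "ADV")],
    [("a", "DET"), ("run", "NOUN"), ("helps", "VERB")],
    [("the", "DET"), ("run", "NOUN"), ("ended", "VERB")],
]


def tag_probability(word, tag, prev_tag=None):
    """Relative-frequency estimate of P(tag | word), optionally also
    conditioned on the tag of the preceding word."""
    counts = Counter()
    for sentence in TAGGED:
        for i, (current, current_tag) in enumerate(sentence):
            if current != word:
                continue
            # When a context is given, keep only occurrences that follow it.
            if prev_tag is not None and (i == 0 or sentence[i - 1][1] != prev_tag):
                continue
            counts[current_tag] += 1
    total = sum(counts.values())
    return counts[tag] / total if total else 0.0


print(tag_probability("run", "VERB"))          # 0.6
print(tag_probability("run", "VERB", "DET"))   # 0.0
print(tag_probability("run", "VERB", "PRON"))  # 1.0
```

In this toy data, "run" is a verb 3 times out of 5 overall, but the surrounding context pins it down completely: a noun after a determiner, a verb after a pronoun. Learning that kind of contextual pattern from data, rather than writing it as a rule, is what made the statistical approach so much more flexible.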

This leap from rules to statistics laid the groundwork for the machine learning and deep learning models that power the tools we use today. It turned NLP from a theoretical linguistic puzzle into a practical, powerful field of computer science.

How Computers Learn To Understand Language

To a computer, our everyday language is just a jumble of characters—a chaotic puzzle with no clear picture. Before it can even begin to grasp what we're saying, it has to tidy up the mess and organize all the pieces. This crucial prep work is called text preprocessing, and it’s the non-negotiable first step in any NLP task.

Think of it like being a chef. You wouldn't just toss an unpeeled onion into a stew. First, you have to peel away the useless skin, chop it into manageable bits, and get it ready for the pan. NLP models do something very similar with our words and sentences.

Deconstructing Language Into Building Blocks

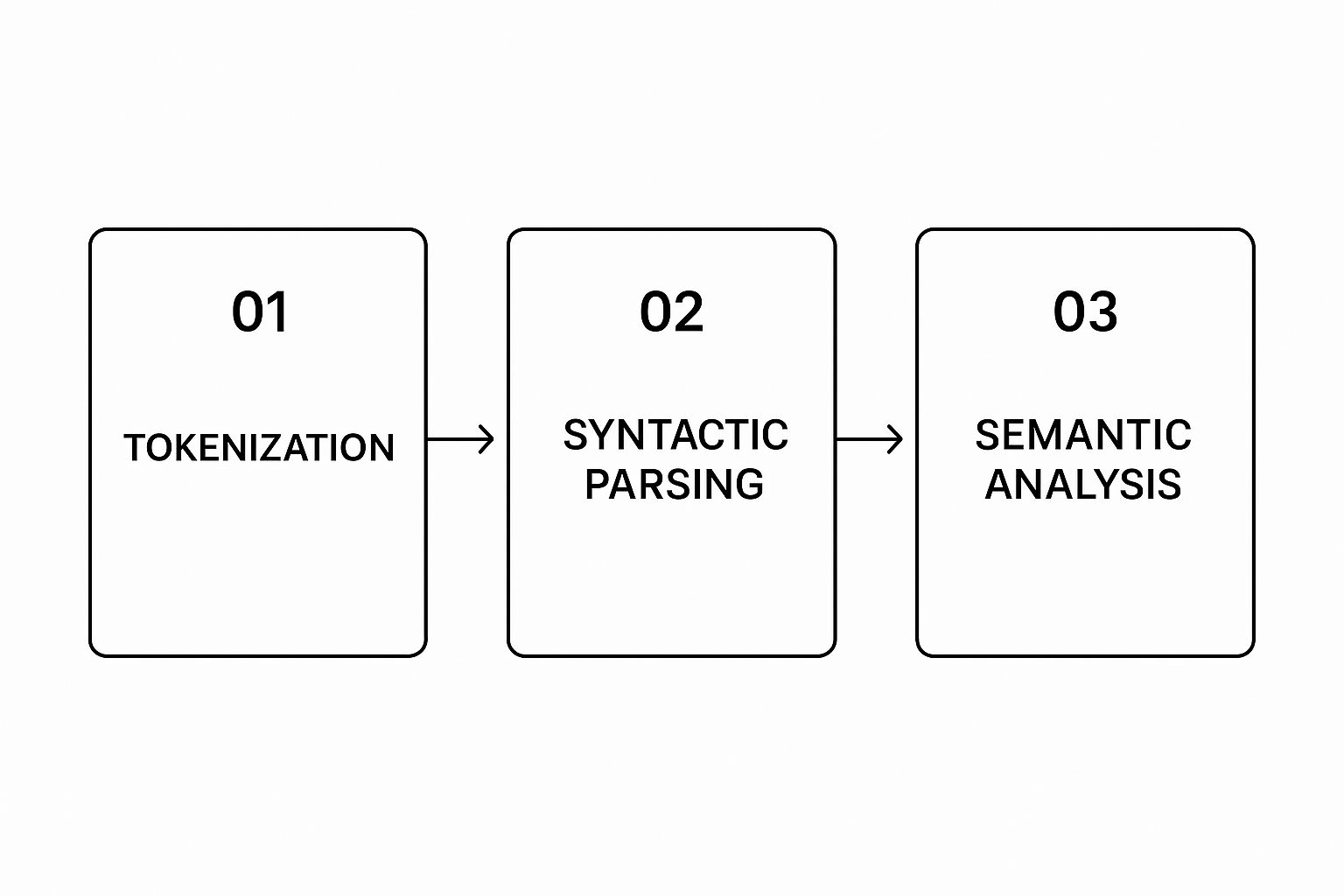

The first job is to break down long, complex sentences into smaller, more manageable parts. This isn't a single action but a series of precise steps designed to strip away the noise and turn messy human text into the clean, structured data a machine can actually work with.

This multi-step cleaning process includes:

- Tokenization: This is where the computer slices a sentence into individual words or “tokens.” For example, the sentence "I love my new phone!" becomes a simple list: ["I", "love", "my", "new", "phone", "!"]. It’s the very first cut.

- Stop-Word Removal: Next, the model gets rid of common, low-impact words like "the," "a," "is," and "in." Removing these “stop words” helps the system focus on the words that actually carry the most weight, like "love" and "phone."

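- Lemmatization or Stemming: This step boils words down to a root form so the machine understands that different variations point to the same fundamental idea. Lemmatization uses a dictionary to map "running," "ran," and "runs" all back to "run." Stemming is a cruder, rule-based shortcut that chops off suffixes: it reduces "running" and "runs" to "run" but leaves an irregular form like "ran" untouched.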
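Here is a minimal Python sketch of these three steps using only the standard library. The stop-word list, the lemma lookup table, and the `crude_stem` rules are simplified assumptions for illustration; libraries such as NLTK and spaCy ship far more complete versions of each.

```python
import re

# Illustrative stop-word list (an assumption; NLTK and spaCy ship much longer ones).
STOP_WORDS = {"the", "a", "an", "is", "in", "i", "my"}

# Toy lemma dictionary; real lemmatizers use full lexicons plus part-of-speech tags.
LEMMAS = {"running": "run", "ran": "run", "runs": "run"}


def tokenize(text):
    """Split text into word tokens and standalone punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def remove_stop_words(tokens):
    """Drop common, low-information words (case-insensitive)."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]


def crude_stem(word):
    """Naive suffix stripping, loosely inspired by (and far simpler than) Porter stemming."""
    word = word.lower()
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            word = word[: -len(suffix)]
            # Undo a doubled final consonant, e.g. "runn" -> "run".
            if word[-1] == word[-2] and word[-1] not in "aeioulsz":
                word = word[:-1]
            break
    return word


def lemmatize(word):
    """Look up the dictionary form, falling back to the lowercased word."""
    return LEMMAS.get(word.lower(), word.lower())


tokens = tokenize("I love my new phone!")
print(tokens)                     # ['I', 'love', 'my', 'new', 'phone', '!']
print(remove_stop_words(tokens))  # ['love', 'new', 'phone', '!']
print([crude_stem(w) for w in ["running", "runs", "ran"]])  # ['run', 'run', 'ran']
print([lemmatize(w) for w in ["running", "runs", "ran"]])   # ['run', 'run', 'run']
```

The last two lines show the difference in practice: suffix rules catch "running" and "runs" but miss the irregular "ran," which only the dictionary-based lemmatizer maps to "run."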

Only after the text is cleaned and organized can the real analysis begin. The infographic below shows how these initial steps flow into the deeper work of understanding.

As you can see, each stage builds on the one before it, taking us from raw text to meaningful interpretation. Once this preprocessing is complete, the model is ready to tackle the two main pillars of NLP.

The Two Pillars of Modern NLP

After tidying up the text, an NLP system essentially has two big jobs: understanding what's been said and then generating a response. These two functions, known as Natural Language Understanding (NLU) and Natural Language Generation (NLG), are the heart and soul of any conversational AI.

NLU is the "reading and comprehension" part. NLG is the "writing and speaking" part. You really can't have one without the other for a meaningful dialogue with a machine.

Let’s break down what each of these does.

Natural Language Understanding: The Reading Part

Natural Language Understanding (NLU) is all about one thing: figuring out the meaning behind the words. It's the cognitive engine of NLP. Once the text is tokenized and cleaned, NLU gets to work analyzing grammar, context, and the relationships between words to decode the user's intent.

For example, when you tell a chatbot, "Book a flight from New York to London for tomorrow," NLU is what identifies your intent (booking a flight) and extracts the key entities (New York, London, tomorrow).
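Here is a deliberately simple sketch of that flight example, assuming hypothetical keyword lists for each intent and a regular expression for the route. Production NLU systems learn intents and entities with trained models rather than hand-written patterns, so treat this as an illustration of the *output* NLU produces, not of how modern systems work internally.

```python
import re

# Hypothetical intent keywords and route pattern, for illustration only.
INTENT_KEYWORDS = {
    "book_flight": {"book", "flight", "fly"},
    "get_weather": {"weather", "forecast", "rain"},
}
CITY = r"[A-Z][a-z]+(?: [A-Z][a-z]+)*"  # one or more capitalized words, e.g. "New York"
ROUTE = re.compile(rf"from (?P<origin>{CITY}) to (?P<destination>{CITY})")
DATE_WORDS = {"today", "tomorrow", "tonight"}


def parse(utterance):
    """Return (intent, entities) using keyword overlap and one regex."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    # Choose the intent whose keyword set overlaps the utterance most.
    intent = max(INTENT_KEYWORDS, key=lambda name: len(INTENT_KEYWORDS[name] & words))
    if not INTENT_KEYWORDS[intent] & words:
        intent = "unknown"
    entities = {}
    route = ROUTE.search(utterance)
    if route:
        entities.update(route.groupdict())
    dates = DATE_WORDS & words
    if dates:
        entities["date"] = sorted(dates)[0]
    return intent, entities


print(parse("Book a flight from New York to London for tomorrow"))
# ('book_flight', {'origin': 'New York', 'destination': 'London', 'date': 'tomorrow'})
```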

NLU also handles more subtle jobs, like sentiment analysis, where it reads a customer review to figure out if the tone is positive, negative, or neutral. This process is conceptually similar to how you might approach data mining in Excel; in both cases, the goal is to pull structured, actionable insights from a mountain of unstructured information.
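One classic (if basic) way to do sentiment analysis is with a lexicon: score each word, flip the sign after a negator, and add everything up. The sketch below assumes a tiny hypothetical word list; established lexicons such as VADER's contain thousands of scored entries, and most modern systems use trained models instead.

```python
import re

# Tiny hypothetical lexicon: positive words score above zero, negative below.
LEXICON = {"love": 2, "great": 2, "good": 1, "slow": -1, "bad": -2, "broken": -2, "terrible": -3}
NEGATORS = {"not", "never", "no"}


def sentiment(review):
    """Label a review positive, negative, or neutral from summed word scores."""
    tokens = re.findall(r"[a-z']+", review.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # Flip polarity after a simple negator, so "not good" counts as negative.
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone and the battery is great"))  # positive
print(sentiment("The screen is not good"))                      # negative
```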

Natural Language Generation: The Writing Part

Once the system understands what you’ve asked for, it’s time for Natural Language Generation (NLG) to step in. NLG is the creative side of the duo, tasked with crafting a human-sounding response from structured data. It’s what gives the machine its voice.

If NLU is the brain that deciphers your request, NLG is the mouth that formulates the reply. It takes the computer's organized data—like flight schedules, weather reports, or a report summary—and converts it into fluent, natural sentences.
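The oldest and simplest form of NLG is template filling: take structured fields and slot them into prewritten sentence patterns, with a little logic to choose the phrasing. The record, field names, and functions below are hypothetical; modern systems often generate text with neural language models rather than fixed templates.

```python
# Hypothetical structured record, e.g. what a flight-search backend might return.
flight = {"origin": "New York", "destination": "London", "departs": "08:15", "price": 512.0}


def describe_flight(record):
    """Template-based NLG: slot structured fields into a fixed sentence pattern."""
    return (
        f"The next flight from {record['origin']} to {record['destination']} "
        f"leaves at {record['departs']} and costs ${record['price']:,.2f}."
    )


def describe_weather(city, day, high, rain_chance):
    """Pick phrasing based on the data, then fill the template."""
    outlook = "Expect rain" if rain_chance >= 0.5 else "It should stay mostly dry"
    return f"{outlook} in {city} {day}, with a high of {high} degrees."


print(describe_flight(flight))
# The next flight from New York to London leaves at 08:15 and costs $512.00.
print(describe_weather("Chicago", "tomorrow", 64, 0.7))
# Expect rain in Chicago tomorrow, with a high of 64 degrees.
```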

You see NLG in action all the time:

- Chatbot Responses: When a bot provides a helpful, coherent answer to your question.

- Automated Summaries: When a tool condenses a long article into a few key takeaways.

- Product Descriptions: When an e-commerce site generates unique marketing copy for thousands of items from a simple spreadsheet of features.

Together, NLU and NLG create a seamless conversational loop. NLU decodes our often-messy human language, and NLG crafts a clear, useful response, completing the cycle of communication.

The AI Models Behind Modern Language Technology

The leap from clunky, rule-based systems to the fluid conversational AI we use today wasn't a slow crawl. It was a complete rethinking of how machines could interact with language. Early NLP models were like meticulously organized dictionaries; they worked, but only if you followed their rigid, predefined rules for grammar and vocabulary. They couldn't handle the beautiful messiness of real human language.

The breakthrough came when we stopped trying to teach machines grammar rules and instead taught them how to learn from our language.

This shift was driven by statistical methods, moving the focus from "following a rule" to "calculating a probability." The 1990s marked a huge turning point with the rise of these statistical and machine learning techniques. Using probabilistic models like Hidden Markov Models (HMMs), systems began to treat tasks such as part-of-speech tagging and speech recognition as probability problems learned from data. The creation of the Penn Treebank corpus in 1996 (a massive collection of over 4.5 million hand-annotated words) showed the industry was all-in on this new, data-first approach. You can get a deeper sense of this critical period by exploring a detailed timeline of NLP's evolution.
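To show what modeling language statistically looks like, here is a minimal HMM part-of-speech tagger with Viterbi decoding. The four tags and every probability are hand-set, hypothetical numbers chosen for illustration; a real tagger estimates them by counting over an annotated corpus such as the Penn Treebank.

```python
import math

# Hypothetical, hand-set HMM parameters for a toy four-tag model.
TAGS = ["PRON", "DET", "NOUN", "VERB"]
START = {"PRON": 0.4, "DET": 0.4, "NOUN": 0.1, "VERB": 0.1}
TRANSITION = {  # P(next tag | current tag)
    "PRON": {"PRON": 0.05, "DET": 0.05, "NOUN": 0.1, "VERB": 0.8},
    "DET": {"PRON": 0.01, "DET": 0.01, "NOUN": 0.9, "VERB": 0.08},
    "NOUN": {"PRON": 0.1, "DET": 0.2, "NOUN": 0.2, "VERB": 0.5},
    "VERB": {"PRON": 0.2, "DET": 0.5, "NOUN": 0.2, "VERB": 0.1},
}
EMISSION = {  # P(word | tag); unlisted words have probability 0
    "PRON": {"they": 0.5, "we": 0.5},
    "DET": {"the": 0.7, "a": 0.3},
    "NOUN": {"run": 0.3, "dogs": 0.4, "park": 0.3},
    "VERB": {"run": 0.6, "ended": 0.4},
}


def log(p):
    return math.log(p) if p > 0 else float("-inf")


def viterbi(words):
    """Return the most probable tag sequence under the toy HMM (log-space Viterbi)."""
    # best[t] = (log-probability of the best path ending in tag t, that path)
    best = {t: (log(START[t]) + log(EMISSION[t].get(words[0], 0)), [t]) for t in TAGS}
    for word in words[1:]:
        best = {
            t: max(
                (score + log(TRANSITION[prev][t]) + log(EMISSION[t].get(word, 0)), path + [t])
                for prev, (score, path) in best.items()
            )
            for t in TAGS
        }
    return max(best.values())[1]


print(viterbi(["they", "run"]))           # ['PRON', 'VERB']
print(viterbi(["the", "run", "ended"]))   # ['DET', 'NOUN', 'VERB']
```

The same word gets different tags because the transition probabilities capture which tag tends to follow which: after a pronoun a verb is likely, after a determiner a noun is.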

This statistical foundation set the stage for the much more powerful models that drive today's technology.

The Rise of Deep Learning and Neural Networks

The next major step forward came with deep learning and neural networks. These models, inspired by the interconnected neurons in the human brain, could uncover far more complex and subtle patterns in text than older statistical methods ever could.

Instead of just looking at the probability of one word following another, neural networks began to learn about context, syntax, and even meaning. This led to a much richer understanding of language, fueling huge improvements in everything from machine translation to sentiment analysis. They quickly became the new gold standard for building high-performing NLP systems.

But even these advanced models had a blind spot. While they were great at processing sequences, they often struggled with long-range dependencies—like connecting a pronoun at the end of a long paragraph back to the subject at the very beginning. One final piece of the puzzle was still missing.

The real game-changer wasn't just about processing words in order; it was about understanding which words matter most in any given context. This required a mechanism that could weigh the importance of different words, no matter where they appeared in a sentence.

Transformers: The Engines of Modern NLP

The arrival of the transformer architecture in 2017 was the moment everything changed. Models like Google's BERT (Bidirectional Encoder Representations from Transformers) and OpenAI's GPT (Generative Pre-trained Transformer) are all built on this foundation. They are the power behind the most impressive AI tools we have today.

So, what makes transformers so special? It all comes down to a component called the attention mechanism.

Think about how you read this sentence: "The delivery truck blocked the road, so it was late." Your brain instantly knows "it" refers to the "delivery truck," not the "road." The attention mechanism gives AI a similar ability. When the model builds its representation of each word, it weighs how relevant every other word in the sentence is and focuses on the most relevant ones.
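At its core, the attention used in transformers is scaled dot-product attention: compare a word's query vector with every word's key vector, turn the scores into weights with a softmax, and take a weighted average of the value vectors. The plain-Python sketch below uses hand-picked, hypothetical 2-dimensional vectors; in a real transformer, queries, keys, and values are learned projections of much larger embeddings, and many attention heads run in parallel.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, on plain lists.

    Returns (outputs, weights); weights[i][j] is how much query i attends to position j.
    """
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))])
    return outputs, weights


# Hypothetical vectors for "truck", "road", "it"; the query for "it" is hand-picked
# to point toward "truck". Real models learn these from data.
tokens = ["truck", "road", "it"]
keys = [[4.0, 0.0], [0.0, 4.0], [1.0, 1.0]]
values = keys
_, weights = attention([[2.0, 0.0]], keys, values)
print(dict(zip(tokens, (round(w, 3) for w in weights[0]))))
```

With these made-up vectors, roughly 98% of the weight for "it" lands on "truck," a toy version of the pronoun resolution described above.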

This ability to zero in on contextual clues is what allows transformer models to do incredible things:

- Understand Ambiguity: They can figure out which meaning of a word is intended based on the words around it.

- Grasp Long-Range Context: They can connect ideas across long paragraphs or even entire documents, just like a human reader.

- Generate Coherent Text: By truly understanding the relationships between words, they can write fluid and logical sentences that make sense.

These advanced models are now the engines behind our most powerful NLP applications. From writing assistants that draft entire emails to chatbots that provide genuinely helpful customer support, the power of transformers is what makes modern AI feel so intelligent. They've pushed the field beyond simple word-matching into the realm of true language comprehension and creation.

Where You Already See NLP In Your Daily Life

You might think of Natural Language Processing as something out of a science fiction movie, but the truth is, it’s already a core part of your everyday routine. It’s the invisible engine working behind the scenes, making your digital life feel more intuitive and intelligent. You probably use it dozens of times before your first cup of coffee.

Think about the last time you asked your phone for directions. When you say, "Hey Siri, what's the traffic like?" you're kicking off a sophisticated NLP process. Your device doesn't just hear sounds; it grasps your intent (you need traffic info), pulls out the key details, and then formulates a genuinely helpful, spoken answer. That whole interaction? Pure NLP.

Your Inbox and Your Voice Assistants

Every day, NLP acts as a quiet digital assistant, sorting, filtering, and even communicating for you. It’s what makes complex tasks feel surprisingly simple.

Two of the most common places you’ll find it are your email and the smart assistants on your phone or in your home.

- Smart Email Features: Ever wonder how Gmail knows to tuck a flight confirmation into your "Travel" folder? That’s NLP classifying text. The same goes for those handy one-click replies like "Sounds good!" or "I'll be there." It's predicting what you’re likely to say next. And, of course, it's the muscle behind spam filters, analyzing message content to keep your inbox clean.

- Virtual Assistants: Assistants like Siri, Alexa, and Google Assistant are NLP powerhouses. They rely on advanced models to juggle speech recognition (figuring out the words you said), natural language understanding (what you actually meant), and natural language generation (crafting a coherent response).
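Spam filtering is a classic text-classification task, and Naive Bayes is one of the traditional ways to do it. The sketch below assumes a four-message hypothetical training set and uses add-one (Laplace) smoothing so an unseen word doesn't zero out a score. Real filters, Gmail's included, are far more sophisticated; this only illustrates the technique.

```python
import math
import re
from collections import Counter

# Tiny hypothetical training set; real filters learn from vast numbers of labeled messages.
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money today", "spam"),
    ("lunch meeting moved to noon", "ham"),
    ("can you review the report today", "ham"),
]


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def train(examples):
    """Count words per label and documents per label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set().union(*word_counts.values())
    return word_counts, label_counts, vocab


def classify(text, model):
    """Multinomial Naive Bayes with add-one smoothing, scored in log space."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label in word_counts:
        score = math.log(label_counts[label] / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in tokenize(text):
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING)
print(classify("free prize money", model))          # spam
print(classify("meeting about the report", model))  # ham
```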

These tools are perfect examples of how NLP translates messy, nuanced human language into structured commands a machine can act on.

Powering Customer Service and Business Insights

Beyond just personal convenience, NLP is a game-changer for businesses, especially in how they listen to and support their customers. It gives them the ability to handle communication and analyze feedback on a scale that was simply impossible before.

When you land on a website and a chat window pops up asking, "How can I help you?", you're almost certainly talking to an NLP-powered chatbot. These bots are trained to handle common questions, troubleshoot issues, and pass more complex problems to a human, making customer support faster and more efficient.

NLP is the engine that turns massive volumes of unstructured text—from customer reviews to social media comments—into structured, actionable data. It finds the signal in the noise.

This is how a company can sift through thousands of product reviews to instantly understand public sentiment. It can spot recurring complaints or identify the features people absolutely love, all without a team of people reading every single comment. To see more examples of how businesses are putting AI to work, check out the Elyx.AI blog.

The entire field has been supercharged by recent breakthroughs. The 2010s brought a huge shift with the development of deep learning and powerful transformer models like Google's BERT and OpenAI's GPT family. These models shattered old performance records for tasks like translation and summarization, helping turn NLP into a global industry now worth over $20 billion. It's this booming market that's fueling the very applications we now take for granted.

Transforming Industries Like Healthcare and Finance

NLP's reach extends far into specialized fields, fundamentally changing how professionals work with high-stakes information. Its ability to read and comprehend expert-level language is unlocking new efficiencies and discoveries.

In healthcare, for instance, doctors and researchers use NLP to scan enormous collections of clinical notes and medical records. This helps them identify patterns in patient outcomes, flag potential disease risks, and drastically speed up research by finding relevant studies among millions of publications.

It’s a similar story in the finance industry, where NLP algorithms perform sentiment analysis at a massive scale. These systems digest news articles, social media chatter, and financial reports in real time to gauge market attitudes toward a stock or the economy. This gives traders and analysts an invaluable edge for their investment strategies. From your email to the emergency room, NLP is an essential tool for making sense of our world's ever-growing flood of text.

Where Human and Computer Conversation is Headed

Natural Language Processing has come a long way. What started as a fascinating academic puzzle is now a core technology that bridges the gap between human language and machine intelligence. We've seen how it can clean up messy text, figure out what we mean, and even generate surprisingly fluent responses.

But this is just the beginning. The evolution of NLP is accelerating, and the most exciting developments are still unfolding. The future isn't just about smarter chatbots; it's about making technology a genuinely helpful and intuitive partner in our daily lives.

The Next Wave of NLP

We're quickly moving past the era of simple commands and rigid, scripted conversations. The next generation of NLP is all about grasping deeper context, being more inclusive, and understanding our world in a much more complete way.

Here’s a glimpse of what's coming:

- Hyper-Personalized AI: Think of an AI assistant that doesn’t just remember your favorite playlist. Imagine one that understands the context of your current project at work, recalls details from your conversations last week, and anticipates your needs based on your long-term goals. That's where we're headed.

- Truly Multilingual Models: Future systems won't just offer literal, word-for-word translations. They'll understand cultural nuances, local dialects, and idiomatic expressions, finally making global communication feel natural and seamless.

- NLP Meets Computer Vision: This is where language and sight come together. An AI could describe a complex scene in a photograph, explain the action happening in a live video feed, or help you find a specific item in a cluttered room based on a simple verbal description.

The real aim is to shift from just processing language to truly understanding communication. This means getting the full picture—not just the words spoken, but the context, the intent, and all the unsaid things that shape a conversation.

Of course, this journey isn't without its hurdles. We have a serious responsibility to tackle challenges like ensuring fairness, rooting out bias from the data these models learn from, and establishing clear ethical rules. As NLP gets more powerful, the need for solid business intelligence best practices becomes absolutely critical to guide how we use it responsibly.

Ultimately, the future of NLP is about making technology feel less like a tool we operate and more like a natural extension of our own ability to communicate. The goal is a world where our devices don't just hear us—they truly understand us.

Got Questions About NLP? We’ve Got Answers.

As you get your head around what natural language processing is, a few questions always seem to pop up. It’s a field that overlaps with big ideas like AI and relies on some very specific tools, so let's clear up some of the most common points of confusion.

What’s the Difference Between AI, Machine Learning, and NLP?

It’s easy to get these terms tangled up, but they fit together quite nicely. Think of them as Russian nesting dolls, with each one sitting inside the other.

- Artificial Intelligence (AI) is the biggest doll—the whole field dedicated to making machines that can think or act in ways we’d consider “smart.”

- Machine Learning (ML) is the next doll inside. It's a type of AI that isn't programmed with rigid rules. Instead, you give it a ton of data, and it learns how to perform a task on its own.

- Natural Language Processing (NLP) is the smallest doll in the center. It’s a specialized part of AI that almost always uses machine learning to specifically focus on one thing: understanding human language.

So, when you hear about NLP, you're really talking about a slice of the AI pie that uses ML to make sense of the words we write and speak. They aren't competing ideas; they work together.

At its heart, NLP is simply a form of AI that uses machine learning to figure out what we mean. All three are essential for building the smart language tools we rely on daily.

What Programming Languages Are Best for NLP?

You could technically use a few different languages for NLP, but let's be honest—one completely dominates the field. Python is the undisputed king. It’s not just because the language itself is relatively easy to pick up; it's the incredible collection of free, open-source tools built specifically for it.

This powerful ecosystem is what really sets Python apart. A few of the heavy hitters include:

- NLTK (Natural Language Toolkit): This is often where people start. It's fantastic for learning the ropes and experimenting with fundamental NLP concepts.

- spaCy: When you need to build something for the real world, spaCy is a go-to. It’s built for speed and efficiency, perfect for production systems.

- Transformers by Hugging Face: This library changed the game by giving developers easy access to thousands of powerful pre-trained models like BERT and GPT.

While other languages like Java and R have their own NLP tools, the sheer momentum, community support, and power behind Python's libraries make it the default choice for nearly everyone in the field.

What Are the Biggest Hurdles for NLP?

For all its amazing progress, NLP still stumbles over some of the same things that make human language so rich and interesting. One of the toughest problems is ambiguity. Think about the sentence, "I saw a man on a hill with a telescope." Who has the telescope? You? The man? Is the telescope just on the hill? We use context to figure this out instantly, but for a machine, that’s incredibly difficult.
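You can see the ambiguity problem concretely by counting how many grammatical structures a parser finds for that sentence. The sketch below runs the CKY algorithm over a tiny hypothetical grammar in Chomsky normal form and counts parse trees instead of building them; the grammar is an assumption for illustration, far smaller than anything used in practice.

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky normal form: binary rules plus word categories.
BINARY = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),
    ("NP", "NP", "PP"),
    ("NP", "Det", "N"),
    ("PP", "P", "NP"),
]
WORD_CATEGORIES = {
    "I": {"NP"}, "saw": {"V"}, "a": {"Det"},
    "man": {"N"}, "hill": {"N"}, "telescope": {"N"},
    "on": {"P"}, "with": {"P"},
}


def count_parses(words, root="S"):
    """CKY chart where chart[i][j][X] counts distinct trees for words[i:j] rooted at X."""
    n = len(words)
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for symbol in WORD_CATEGORIES[word]:
            chart[i][i + 1][symbol] += 1
    # Fill longer spans from shorter ones, trying every split point k.
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for parent, left, right in BINARY:
                    chart[i][j][parent] += chart[i][k][left] * chart[k][j][right]
    return chart[0][n][root]


print(count_parses("I saw a man with a telescope".split()))            # 2
print(count_parses("I saw a man on a hill with a telescope".split()))  # 5
```

Even this small grammar gives the longer sentence five parse trees, one for each way of attaching "on a hill" and "with a telescope." The count grows quickly as phrases are added, which is why parsers lean on context and learned preferences to pick the intended reading.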

Another massive challenge is data bias. NLP models learn from the text we feed them—and the internet is filled with human biases. If a model is trained on data that contains unfair stereotypes about gender, race, or culture, it will learn and even amplify those biases in its own responses. A huge part of current NLP research is focused on finding ways to build fairer, more ethical systems.

Ready to put the power of NLP to work in your own spreadsheets? Elyx.AI is an AI-powered Excel add-in that lets you clean data, pull insights, and even translate text just by typing simple commands. Stop wrestling with formulas and start talking to your data. Visit https://getelyxai.com to see how it works and begin your free trial.
